Section 1: Introduction

[Note: this is an evolving document which I intend to update and improve upon periodically. Feel free to use the contact page to send me input or criticisms.]

“The critical habit of thought, if usual in society, will pervade all its mores, because it is a way of taking up the problems of life. Men educated in it cannot be stampeded by stump orators … They are slow to believe. They can hold things as possible or probable in all degrees, without certainty and without pain. They can wait for evidence and weigh evidence, uninfluenced by the emphasis or confidence with which assertions are made on one side or the other. They can resist appeals to their dearest prejudices and all kinds of cajolery. Education in the critical faculty is the only education of which it can be truly said that it makes good citizens.” ― William Graham Sumner

Critical thinking, simply put, is a disciplined way of thinking that attempts to get at the truth, or evaluate the veracity of information, through the application of reason and logic. A commitment to critical thought is the cornerstone of philosophy, which itself is nothing more than the application of critical thought to all manner of ideas, questions and problems that we encounter in life. Learning to think critically is one of the most important things anyone can learn to do. Thinking critically allows you to tell a good argument from a bad argument, or good evidence from bad evidence; to recognize errors in your own reasoning and the reasoning of others; to spot deceptions and con-artistry; to recognize the difference between legitimate science and shoddy science or pseudoscience; to recognize potential problems with legitimate scientific research and statistical analyses, etc.

A person who is trained to think critically will be highly resistant to the various methods of persuasion that disingenuous ideologues, salespersons, and propagandists employ, such as: using scientific jargon in an attempt to confuse or appear legitimate, or more generally, using verbosity or oratory skill in order to dazzle or give the perception of expertise or authority; the hypnotic effect of sheer repetition; making implausible claims that prey on the hopes of the desperate; playing on common fears; making specious claims that commit various logical fallacies; etc. Why are these abilities important? Because they will allow you to differentiate between accurate and inaccurate information, and make better choices concerning your life and the lives of others; and making better choices usually leads to living a better, more enjoyable, more rewarding, more fulfilling, more virtuous life. On a larger scale, having a population capable of critical thinking means a better democracy, and a better society.

One of the most fundamental aspects of critical thinking is to combat our tendency to construct an identity around our beliefs. All too often people become deeply emotionally invested in their opinions because they have constructed their personal identity around them. This is evidenced by the fact that when people are asked to describe themselves they often proudly proclaim themselves to be a Christian, a Muslim, an atheist, a socialist, a capitalist, a liberal, a Democrat, a Republican, a Libertarian, etc., as one of the first ways of describing who they are. Someone who is committed to critical thinking, or seeking the truth, no matter what it may be, is more reluctant to describe themselves in such terms. If pressed, a person committed to critical thought may say that such and such label best summarizes the view they hold, based on the evidence available to them, but this is always to be understood as a tentative descriptor of their current views, not as a defining characteristic of who they are as an individual. This does not mean that a person committed to philosophy or critical thought cannot ardently defend a particular position or work toward a certain cause or aim, but it does mean that they are always open to examining new evidence that may potentially change their mind.

Section 2: The Basics of Logic ― Some General Terminology and What It Is

Before we delve into what exactly logic is, let’s clarify some terminology. An argument, in the logical or philosophical sense, is not what you may think; in everyday conversation we use the term “argument” to describe a heated disagreement that two or more individuals may be having, but in philosophy an argument is any attempt by a person to make a case for some conclusion. When a person makes an argument they make statements of propositions ― which are known as premises in an argument ― that are intended to serve as a foundation that leads to, or in other words, logically entails their conclusion.

Logic is simply the study and application of the laws of inference, and with logic we can carefully analyze arguments to determine if they adequately make the case for the conclusion they arrive at. (Note: Follow the links on statements and propositions above for more clarification of these terms.) Logic is essentially a tool or a method that we can use to help us differentiate “good” arguments from “bad” arguments; in this context we are using good to mean “well argued” in a logical sense, and bad to mean fallacious (this term is covered in the next paragraph) or poorly argued in a logical sense. Logic is one of the main tools used in critical thinking, but critical thought, being a more broad concept, also involves the application of general epistemic or rational principles, such as skepticism of outrageous claims (see Sagan’s razor on the glossary page); inference to the best explanation (see Section 2.5 on abductive reasoning); conducting or utilizing empirical investigation, if at all possible; etc. These principles may overlap with logic to an extent but are in some ways separate.

(Note: there is some debate within philosophy about logical theories; the discipline that examines these theories is known as metalogic.)

To explore further what logic is and how it works let’s take a look at an example of an argument:

Premise 1 (or P1): All dogs are mammals.

Premise 2 (or P2): All mammals are vertebrates.

Conclusion (or C): All dogs are vertebrates.

The above argument is known as a syllogism, and it is both valid and sound (two terms we will cover in just a bit) so it is a good argument, that is, it is not fallacious. A fallacious argument is one in which the arguer has either committed a formal fallacy or an informal fallacy. A formal fallacy is an error in the argument’s form or structure which makes the argument invalid because it is based on an erroneous inference; an informal fallacy, on the other hand, is committed when there is an error in one’s information or reasoning which makes the argument unsound, that is, unconvincing or implausible, or simply untrue. In order to recognize fallacious arguments one must become familiar with basic logic and the different types of fallacies that can make an argument “bad”. To reiterate, fallacies come in two varieties: formal and informal; formal fallacies (aka logical fallacies) are those where the argument is constructed in such a way where the conclusion does not follow from the premises, that is, it is invalid; and an informal fallacy is committed when there is an error in the information one is working with or in one’s reasoning – which makes the argument unsound, that is, not convincing, or simply untrue.
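As a rough illustration of why the dog syllogism works, "All A are B" can be pictured as "the set A is contained in the set B". The sketch below is only a picture of the argument, not a proof, and the animal names and the helper `all_are` are made-up sample data and naming assumed for the example.

```python
# Hypothetical sample data: each category is modeled as a set of example animals.
vertebrates = {"dog", "cat", "whale", "sparrow", "trout"}
mammals = {"dog", "cat", "whale"}
dogs = {"dog"}

def all_are(a, b):
    """'All a are b' holds when every member of a is also a member of b."""
    return a <= b  # subset test

p1 = all_are(dogs, mammals)         # P1: All dogs are mammals.
p2 = all_are(mammals, vertebrates)  # P2: All mammals are vertebrates.
c = all_are(dogs, vertebrates)      # C: All dogs are vertebrates.

print(p1, p2, c)
```

Because set containment is transitive, any choice of sets that makes P1 and P2 true will also make C true, which is the sense in which the form of the argument guarantees the conclusion.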

Section 2.1: The Basics of Logic ― More Terminology and Concepts

In order to better understand terms like valid and sound, let’s look at the basics of logic. Logic is a method used to evaluate and construct arguments. Evaluating an argument logically allows you to determine if it is a valid argument, that is, if it is constructed in such a way that the form (or structure) of the argument ensures that the conclusion necessarily follows from the premises, if we assume the premises to be true. This is the first step in determining if we should consider an argument. If the argument is determined to be valid we might, in certain situations, go on to determine if it is sound, that is, whether it actually has true premises. It is easy to mix up the concepts of validity and soundness, but they mean two different things. An argument can be valid even if it has a false premise or premises and a true or false conclusion; but if an argument is valid and has all true premises it has to have a true conclusion – because in a valid argument the conclusion necessarily follows from the premises, so that if the premises are true, so must be the conclusion. Knowing this, we can therefore know that an argument in which all the premises are true but the conclusion is false is necessarily invalid, that is, there has to be a mistake in form (i.e., a formal fallacy), because correct form would make it so that a true conclusion necessarily follows from true premises. All invalid arguments are unsound, but not all valid arguments are sound. Again, soundness is the final step in evaluating an argument that has passed the first test – the test for correct form or validity; soundness tells us if the argument’s premises are actually true. Let’s clarify this concept with examples. Below is an example of an invalid argument:

P1: If something is a functioning bicycle, then it has exactly 2 wheels.

P2: This thing has exactly 2 wheels.

C: This thing is a bicycle.
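One way to see that this argument is invalid is to look for a counterexample: a situation in which both premises are true and the conclusion is false. The sketch below does this over a tiny, made-up list of things; the data and the function names are assumptions for illustration only.

```python
# Hypothetical things, each described by two facts.
things = [
    {"name": "bicycle", "functioning_bicycle": True, "wheels": 2},
    {"name": "motorcycle", "functioning_bicycle": False, "wheels": 2},
    {"name": "tricycle", "functioning_bicycle": False, "wheels": 3},
]

def p1_holds(world):
    """P1: every functioning bicycle in the world has exactly 2 wheels."""
    return all(t["wheels"] == 2 for t in world if t["functioning_bicycle"])

def counterexamples(world):
    """Things that satisfy P2 (exactly 2 wheels) yet make C (is a bicycle) false."""
    if not p1_holds(world):
        return []
    return [t["name"] for t in world
            if t["wheels"] == 2 and not t["functioning_bicycle"]]

found = counterexamples(things)
print(found)  # ['motorcycle']
```

A single counterexample is enough: the premises can be true of the motorcycle while the conclusion is false of it, so the premises do not guarantee the conclusion.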

Section 2.1.2: The Laws of Classic Logic

There are 3 laws of classic logic (aka laws of thought) that are generally considered to be axiomatic in typical discourse:

- The Law of Identity — for all a, a = a; that is, each entity is identical with itself. (Note: The Identity of Indiscernibles (aka Leibniz’s Law) is a related concept, which states that there can be no two separate entities that possess all of the same properties or predicates. For example, if x and y have all the same properties, they are not x and y, they are only x. See Max Black’s balls for an apparent or actual counter-example. Also related: the issue of universals vs. particulars in metaphysics.)

- The Law of Non-contradiction — contradictory statements cannot both be true, in the same sense, and at the same time. For example, A = B at time t1, and A ≠ B at time t1 are mutually exclusive or contradictory statements.

- The Law of the Excluded Middle — everything must either be or not be.
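For readers who like to see things spelled out, the three laws can be written as small checks over the two truth values. This is only an illustration: Python's Boolean type already assumes two-valued classical logic, so the checks cannot fail, and the function names are chosen here for the example.

```python
def identity(a):
    """Law of Identity: each value is equal to itself."""
    return a == a

def non_contradiction(p):
    """Law of Non-contradiction: p and not-p are never both true."""
    return not (p and not p)

def excluded_middle(p):
    """Law of the Excluded Middle: either p is true or not-p is true."""
    return p or not p

truth_values = [True, False]
laws_hold = all(
    identity(p) and non_contradiction(p) and excluded_middle(p)
    for p in truth_values
)
print(laws_hold)  # True
```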

Section 2.2: Formal Fallacies

The bicycle argument at the end of Section 2.1 is invalid because it uses an incorrect method of inference called affirming the consequent. Affirming the consequent of a conditional statement (an “if…, then…” statement) is fallacious because, as demonstrated by this argument, other things can have the condition or quality described in the consequent. In this instance, more things than bicycles have exactly two wheels – mopeds, motorcycles, etc., also have two wheels. It could be the case in this argument that the premises are actually true, and that the conclusion is actually true, but the argument would still be invalid because the premises do not logically entail the conclusion – you cannot deduce that something is a functioning bicycle just based on knowing premises 1 and 2. The argument could have gone wrong in a similar fashion if we committed another formal fallacy by denying the antecedent. If we denied the antecedent above in premise 2 our argument would look like this:

P1: If something is a functioning bicycle, then it has exactly 2 wheels.

P2: This thing is not a functioning bicycle.

C: This thing does not have exactly 2 wheels.

We can see here that denying the antecedent is also an incorrect method of inference; just because something doesn’t have the quality or condition expressed in the antecedent doesn’t mean it doesn’t have the quality or condition expressed in the consequent. In this instance, as was already stated, mopeds, motorcycles, etc., can also have this quality. In order to correctly make inferences using a conditional statement we must either affirm the antecedent (a method of inference known as modus ponens) with another premise – which gives us a conclusion which is the affirmation of the consequent; or we can deny the consequent (also known as modus tollens) with another premise – which gives us a conclusion which is the negation of the antecedent. Below are examples of how we could make a valid inference given the information above, using modus ponens and modus tollens.

Modus ponens:

P1: If something is a functioning bicycle, then it has exactly 2 wheels.

P2: This thing is a functioning bicycle.

C: This thing has exactly two wheels.

Modus Tollens:

P1: If something is a functioning bicycle, then it has exactly 2 wheels.

P2: This thing does not have exactly 2 wheels.

C: This thing is not a functioning bicycle.
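The two valid forms and the two fallacies can be compared side by side with a small truth-table check. This is a minimal sketch, assuming the conditional is read as the material conditional of classical logic; `implies` and `is_valid` are helper names invented for the example. Here p stands for "this thing is a functioning bicycle" and q for "this thing has exactly 2 wheels".

```python
from itertools import product

def implies(p, q):
    """Material conditional: 'if p, then q' is false only when p is true and q is false."""
    return (not p) or q

def is_valid(premises, conclusion):
    """Valid means: no assignment makes every premise true and the conclusion false."""
    for p, q in product([True, False], repeat=2):
        if all(prem(p, q) for prem in premises) and not conclusion(p, q):
            return False
    return True

conditional = lambda p, q: implies(p, q)  # P1: if p, then q

forms = {
    "modus ponens": ([conditional, lambda p, q: p], lambda p, q: q),
    "modus tollens": ([conditional, lambda p, q: not q], lambda p, q: not p),
    "affirming the consequent": ([conditional, lambda p, q: q], lambda p, q: p),
    "denying the antecedent": ([conditional, lambda p, q: not p], lambda p, q: not q),
}

results = {name: is_valid(prems, concl) for name, (prems, concl) in forms.items()}
for name, valid in results.items():
    print(f"{name}: {'valid' if valid else 'invalid'}")
```

Both fallacies fail on the same row of the table: p false and q true, which is the "motorcycle" case of a two-wheeled thing that is not a bicycle.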

Affirming a disjunct……

Here is an example of how an argument can be valid, but have one or more false premises and a false conclusion:

P1: If something is a cat, then it must be a robot.

P2: Jones is a cat.

C: Therefore, Jones is a robot.

In this example the first premise is false, and the conclusion is also false, but the argument is constructed in a logically valid way – the conclusion necessarily follows, or can be inferred from the premises of the argument. So, again, testing for validity doesn’t tell us whether the argument is factual or true, that is, that it corresponds with reality. Validity just tells us that an argument is constructed correctly, so that if the premises were true then the conclusion would be as well. Although the argument in this example is valid, it is unsound because at least the first premise is not true.

Here’s an example of a valid argument with one or more false premises and a true conclusion:

P1: All bats are birds.

P2: All (healthy, non-injured) birds can fly.

C: All (healthy, non-injured) bats can fly.

In this example both of the premises are false, but the conclusion is true (it is a fact that there are no known flightless bat species). This argument is also valid because if the premises were true the conclusion would necessarily follow. Though it is valid, this argument is also unsound because the premises are false.

Below is an example of an argument that has all true premises, but a false conclusion – just by seeing that this is the case we know it is invalid.

P1: If I drop the ball it will hit the floor.

P2: I dropped the ball.

C: Therefore the ball is floating in the air.

The conclusion of this argument does not follow from the true premises, so it has to be invalid.

This might seem very confusing; you might say, “How can an argument be valid if it is clearly false?” Well, just remember that validity is testing to see if an argument is constructed correctly – we’re not assessing its facticity (its truthfulness) at this stage. It might help to think about a real-world scenario – in many cases we don’t actually know the truth values of premises.

For instance consider this argument:

P1: If it rains today, then there will be water in the creek.

P2: It rained today.

C: Therefore, there is water in the creek.

Let’s say we’ve been inside all day in a windowless room where we can’t hear or see rain falling, and we don’t know anything about the area, so we don’t know if P1 or P2 is actually true in this case, but if they were true, which we assume when evaluating validity, then the conclusion would necessarily follow from these premises. So, this is a valid argument. In order to determine if this is also a sound argument we must actually find out the truth values of P1 and P2 – if we find out that they are both actually true, then we have a sound argument.
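The two-step evaluation described here, validity first and soundness second, can be sketched in a few lines. This is an illustrative sketch only: the function names are invented, and the "actual" truth values passed to `is_sound` stand in for whatever we would learn by leaving the windowless room and checking.

```python
from itertools import product

def implies(p, q):
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q

def is_valid():
    """Form check for: P1 'if rain, then water', P2 'rain', C 'water'."""
    return all(
        water  # the conclusion must hold...
        for rain, water in product([True, False], repeat=2)
        if implies(rain, water) and rain  # ...whenever both premises hold
    )

def is_sound(p1_actually_true, p2_actually_true):
    """Sound = valid form plus premises that are in fact true."""
    return is_valid() and p1_actually_true and p2_actually_true

print(is_valid())             # True
print(is_sound(True, True))   # True
print(is_sound(True, False))  # False
```

Note that `is_valid` never looks at the weather: it only inspects the form. Soundness is the part that needs facts about the world.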

Section 2.3: The Difference Between Necessary Conditions and Sufficient Conditions in Logic

In logic a necessary condition is a condition that must be present for something to be or something to occur. In the case of conditional statements, such as, “If p, then q,” q is a necessary condition for p, that is, if p is true then we know q has to be true; and in this statement p is a sufficient condition for q, which we will cover more in the next paragraph. For example, if we have a circuit made out of a battery, 2 wires, and a light bulb, we could form this statement: “If the light bulb is lit up, then the circuit is complete.” In this statement, the circuit being complete is a necessary condition for the bulb being lit up; if the bulb is lit up, we know the circuit is complete. But, and this is important, knowing the circuit is complete does not guarantee the bulb will be lit up, because other necessary conditions are needed to make sure the bulb will light up, such as the filament in the bulb being intact (not burnt out), as well as the glass of the bulb being intact (not broken or cracked). A necessary condition for there to be a fire is the presence of some combustible material. This is not the only condition needed for fire, but it is a necessary condition, in that, without it there can be no fire. So, as with all necessary conditions, we know that if we have a fire there is also combustible material present; here, as with all conditionals, the antecedent is a sufficient condition and the consequent is a necessary condition. Just as above, if we said: “If there is a fire, then there is combustible material present,” and we affirm that there is indeed a fire, then we know combustible material is present (by modus ponens).

A sufficient condition, on the other hand, is all that is needed for something to be or for something to occur. For example, the fact that it is raining is a sufficient condition for the street being wet. In logic shorthand we would indicate a sufficient condition as follows: “p, if q,” meaning that if q is true we can know that p is true because q is sufficient for p. (Note: “p, if q” would be written as q –> p since sufficient conditions make up the antecedent of a conditional and necessary conditions make up the consequent. By contrast, “p, only if q” marks q as a necessary condition for p and would be written as p –> q. The mnemonic SUN is helpful for remembering this, where the “U” in the middle is inverted to make the conditional horseshoe.) Necessary conditions, unlike sufficient conditions, do not guarantee a certain conclusion. In our first example we said a complete circuit was a necessary condition for the bulb to be lit up; from this we can infer that if we have a lit bulb we have a complete circuit (because a lit bulb is a sufficient condition for having a complete circuit), but we cannot infer from knowing we have a complete circuit that we have a lit bulb (for instance, if the bulb is obscured from our vision) because other necessary conditions must be present to ensure the bulb will light up. In other words, we cannot make a deductive inference from a single necessary condition alone. In section 2.4 we will consider conditions that are both necessary and sufficient.
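The circuit example can be turned into a small check of what "necessary" and "sufficient" mean. The sketch assumes a toy model in which the bulb lights exactly when the circuit is complete, the filament is intact, and the glass is intact; that model and the names used are assumptions for illustration, not a claim about real electronics.

```python
from itertools import product

def bulb_lit(circuit_complete, filament_intact, glass_intact):
    """Assumed toy model: the bulb lights only when all three conditions hold."""
    return circuit_complete and filament_intact and glass_intact

states = list(product([True, False], repeat=3))

# Necessary: in every state where the bulb is lit, the circuit is complete.
circuit_is_necessary = all(c for c, f, g in states if bulb_lit(c, f, g))

# Sufficient: in every state where the circuit is complete, the bulb is lit.
circuit_is_sufficient = all(bulb_lit(c, f, g) for c, f, g in states if c)

print(circuit_is_necessary)   # True
print(circuit_is_sufficient)  # False
```

The second check fails because of states such as "circuit complete, filament burnt out", which is the situation the text describes.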

Section 2.4: Biconditional Statements – Statements Involving both Necessary and Sufficient Conditions

A statement that uses the conjunction “if and only if” (abbreviated iff) is known as a biconditional statement. A biconditional statement takes this form: “p, if and only if q,” which is equivalent to saying: “if p, then q, AND if q, then p.” In such a statement p can only be true if q is true and q can only be true if p is true, that is, p and q must share the same truth value for the biconditional statement to be true. So, p is a necessary and sufficient condition for q, and q is a necessary and sufficient condition for p. (Note: sometimes alternative wording is used, such as: “p just in case q;” “p precisely if q;” or “p is equivalent to q.”)

Let’s take a look at an example of a biconditional statement in an argument:

P1: The seed in the petri dish will sprout if and only if it is exposed to water.

P2: The seed is exposed to water.

C: Therefore the seed will sprout.

In this example the presence of water is both a necessary and a sufficient condition for the seed to sprout. The seed will not sprout without water (it is necessary) and it is all that is required for it to sprout (it is sufficient). Note that we could also say that a sprouted seed was a necessary and sufficient condition to indicate the presence of water in our little petri dish. Now contrast the above with the conditional statement: “If an intruder is at the door, then the dog barks.” This statement says that an intruder being present at the door is a sufficient condition for the dog to be barking, but it is not a necessary condition, i.e., there could be another reason why the dog is barking.
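The claim that “p, if and only if q” says the same thing as “if p, then q, AND if q, then p” can be checked row by row in a truth table. A minimal sketch, again assuming the material conditional; the helper names are invented for the example.

```python
from itertools import product

def implies(p, q):
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q

def iff(p, q):
    """Biconditional: true exactly when p and q share a truth value."""
    return p == q

rows = []
for p, q in product([True, False], repeat=2):
    both_directions = implies(p, q) and implies(q, p)
    rows.append((p, q, iff(p, q), both_directions))
    print(p, q, iff(p, q), both_directions)

# The last two columns agree on every row.
equivalent = all(r[2] == r[3] for r in rows)
```

A plain conditional, by comparison, is also true on the row where p is false and q is true, which is why it expresses a sufficient condition only.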

Section 2.5: Analytic Propositions vs. Synthetic Propositions and Different Forms of Knowledge and Truth

Now let’s consider an important difference between propositions. The German philosopher Immanuel Kant famously laid out what is known as the analytic-synthetic distinction in his work titled The Critique of Pure Reason. First let’s consider analytic propositions: an analytic proposition is expressed by a subject-predicate statement in which the predicate concept is definitionally part of the subject concept. For example, the statement “All triangles necessarily have exactly three sides,” expresses an analytic proposition because a triangle is defined as a plane figure or a two-dimensional shape with exactly 3 sides (and exactly 3 angles). So, an analytic proposition is intended to be definitionally true, and for this reason analytic propositions are sometimes said to be trivially true. The way Kant put it was to say that in an analytic proposition the predicate concept is “covertly contained” in the subject concept.1

In contrast, a synthetic statement is a subject-predicate statement which makes a claim about how the subject relates to the world. A synthetic statement is true only if what is claimed corresponds with reality. An example of a stated synthetic proposition is: “All dinosaurs are extinct.” Such a claim is true, if indeed, all dinosaurs are extinct. The distinction between synthetic and analytic propositions is important because it relates to how we can obtain knowledge.

Section 2.5.1: Forms of Knowledge

There are 3 proposed forms of knowledge in philosophy: a priori, a posteriori, and innate knowledge. (Revelatory, aka revealed, transcendent, or divine knowledge is considered a form of knowledge by religious philosophers and theologians, but I won’t cover it here because it assumes the existence of unverifiable or unfalsifiable entities, and therefore has no place in a discussion of logic.)

A posteriori (ah pahst-ear-ee-or-ee) knowledge is dependent upon experience, such as simple observation or an empirical observation, like a scientific study. The statement “cats are vertebrates” is an example of a posteriori knowledge because it is dependent upon observing what a cat is like. The philosophers who hold that we can only gain non-trivial knowledge through experience are known as empiricists.

A priori (ah pre-or-ee) knowledge is said to be deduced from logic alone, independent of experience. The statement “all fathers have had children” is an example of a priori knowledge, since it can be determined through logical reasoning – you need not observe many fathers to know that they all have children, since the predicate concept of “having children” is contained in the subject concept, i.e., it is definitionally true. The philosophers who hold that we can only completely trust a priori knowledge, i.e., knowledge gleaned through reason, independent of experience, are known as rationalists. To clarify this concept a bit it should be stated that one may need experience to know what a father is, but one’s justification for knowing that fathers have children isn’t based on experience, according to rationalists. In other words, a priori knowledge may require experience, but a priori reasoning is independent of, or prior to, experience.

Innate knowledge should be contrasted with a priori knowledge, because although the two concepts may appear similar, they are not the same. Innate knowledge is said to be present in a mind before any experience, so that it is completely independent of experience. For example, instinct, such as the sea turtle’s instinct to move toward the ocean after hatching, might be considered a type of innate knowledge. Those who hold that there is innate knowledge are known as nativists.

The divide between empiricism and rationalism centers on whether a priori knowledge can be justified independent of experience, and whether it states anything other than trivial truths or tautologies. Radical empiricists deny that a priori knowledge is a form of knowledge. With regard to the distinction between analytic and synthetic propositions, and how they relate to the forms of knowledge, it can be said that it is somewhat uncontroversial to claim that we can know or verify analytic propositions a priori, but it is controversial to claim that we can verify synthetic claims a priori; in contrast it is not controversial to say that we can verify synthetic propositions a posteriori. Immanuel Kant famously held that synthetic a priori knowledge was possible, e.g., math, the categorical imperative.

Section 2.5.2: Types of Truth

There are said to be two types of truth: necessary truths and contingent truths. A necessary truth cannot be false. For example, 2 + 2 = 4 is regarded by most philosophers as being necessarily true – there is no way it could be false. Most philosophers, but not all, regard analytic statements and valid logical statements, such as, “If P then Q; not Q; therefore not P,” as being necessarily true. (Note: Kant considered knowledge of arithmetic to be synthetically derived, so he would not be among these.)

A contingent truth is a way the world is, but we can logically conceive of it being a different way. In other words, it is possible it could have been a different way if the circumstances were different. An example of a contingent truth is that George W. Bush was elected president of the United States in the year 2000 – this is a fact about our world, but it is possible the election would have turned out differently had something occurred beforehand to change the course of events. One could say that there is a “possible world” where George W. Bush was not elected president, but the notion of possible worlds is contentious. In contrast, necessary truths would be true in all possible worlds.
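For statements of propositional logic, a rough analogue of "true in all possible worlds" is "true under every assignment of truth values". The sketch below uses that analogue to separate a formula that cannot come out false from one whose truth depends on how things are. Treating truth assignments as stand-ins for possible worlds is a simplifying assumption made here, and it says nothing about cases like 2 + 2 = 4; the function names are invented for the example.

```python
from itertools import product

def implies(p, q):
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q

def classify(formula):
    """Evaluate a two-variable formula under every truth assignment."""
    values = [formula(p, q) for p, q in product([True, False], repeat=2)]
    if all(values):
        return "true under every assignment"
    if not any(values):
        return "false under every assignment"
    return "contingent on the assignment"

# "If P then Q; not Q; therefore not P" written as one conditional formula.
modus_tollens = lambda p, q: implies(implies(p, q) and not q, not p)
# A plain conditional, whose truth depends on how things are.
plain_conditional = lambda p, q: implies(p, q)

print(classify(modus_tollens))
print(classify(plain_conditional))
```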

Section 2.6: Induction vs. Deduction vs. Abduction

All of the arguments we have examined up to this point have been deductive arguments, that is, they are arguments where we are supposed to be able to reach a certain conclusion, based on what is assumed to be true in the premises, or what is definitionally true about them, but most of our real-life questions will involve inductive or abductive reasoning, that is, attempting to reach conclusions that are not certain, but are most likely to be true.

Inductive Reasoning

In a “good” or strong inductive argument the premises should give us strong evidence to believe the conclusion is true, or, more accurately, the premises should contain a sufficient amount of reliable evidence to make the conclusion highly probable. An inductive argument that fails to provide strong evidence for its conclusion is known as a weak inductive argument, which, by way of analogy, can be like an invalid deductive argument, in that something is wrong with the method of inference, or it can use faulty reasoning, i.e., it may contain an informal fallacy (see Section 3 below). An inductive argument that is strong, and has true premises, is a cogent argument, that is, it ensures that the conclusion is most likely to be true, or, in other words, that we can have a great deal of confidence in the veracity or truthfulness of the conclusion. (Note: cogency in inductive arguments is analogous to soundness in deductive arguments.) Every conclusion from a cogent inductive argument is open to being disproven if counter-evidence is presented, that is, it is self-correcting, and does not make claims to certainty. The sciences commonly use inductive reasoning to analyze data and give us scientific theories which are rooted in well established truths or facts (an interpretation of empirical data that has an extremely high probability of being true), which we can have a great deal of confidence in, unless overwhelming contrary evidence is presented.

Here is an example of a weak (analogically equivalent to invalid in deductive reasoning) inductive argument:

P1: All of the people I have met with blue eyes know how to play the guitar well.

P2: Bill has blue eyes.

C: Therefore Bill probably knows how to play the guitar well.

This is a weak inductive argument because we cannot confidently infer the conclusion from the premises. There are a couple of problems with such an inference: first, it is an example of the hasty generalization fallacy, since the number of people one person has met does not usually meet the criteria for a sufficient sample size (see section 4 on understanding statistics); secondly, a sample comprised of people that one person has met is not likely to be sufficiently random – for example one could live in a town where, for whatever reason, an exceptionally large percentage of people take guitar lessons – but the argument here does not say where Bill is from. Because of these inferential problems we cannot say with any confidence that Bill probably knows how to play the guitar well. If fallacies occur in inductive logic they are often informal fallacies, which we will discuss below, but they may also be formal in nature.

Historically, inductive reasoning has faced some criticism based on what some perceive to be a “problem of induction”, but this is largely based on a definition of inductive reasoning that seeks to “prove too much”, i.e., a definition which claims that induction can prove something to be true. Induction can only prove to what degree something is probable, given that one is using an appropriate methodology. For example, imagine a scenario where a person starts blindly picking gumballs out of a jar of 100 gumballs, and shaking the jar to randomly distribute the gumballs between picks. If the person picks 20 red gumballs in a row there is a somewhat weak probability that the next gumball they pick will be red.

If they have picked 99 red gumballs in a row, then there is a very high probability that the next gumball they pick will be red. In neither case is it proven that the next gumball they pick will be red. As Bertrand Russell explained with his example, which used swans instead of gumballs, if the 100th gumball turns out to be blue, it does not disprove the principle of induction, it merely shows that something improbable has happened.2 So, in summary, induction cannot prove anything with absolute certainty, it can only show probability, and counter evidence does not show the principle of induction to be false, it only shows that an improbable event has occurred.

A much debated topic in epistemology centers on how it is that we know the principle of induction, that is, if we do indeed know it. Some, like Russell, argued that we can know the principle of induction by reason, whereas others, like Hume, argued that we cannot know it by reason, since reason can only pertain to “relations of ideas”, like the relation of ideas that make up this true statement about a triangle: all triangles have three sides.2, 3 Any knowledge beyond relations of ideas requires experience, and we cannot use experience to prove the validity of a principle grounded in experience, for to do so would be to employ circular reasoning. Regardless of this ongoing debate, the principle of induction is considered a sound principle on practical grounds – it would be hard for one to argue that the methodology of science, which is based on the principle of induction, hasn’t proved to be an extremely successful and valuable method for arriving at knowledge of the universe.

In scientific study we use the principle of induction, but we often do not have as clear-cut a case as in the gumball jar scenario. For example, if we are checking to see how many people out of a population have blue eyes, we probably do not have the practical ability to survey the entire population. What we can do is survey a large enough number of people that we have an appropriate sample size, and attempt to randomize the survey so that we are not getting poor data by selecting only from a portion of the population that shares some trait not found in the population as a whole. We’ll talk more about these concepts of sample size and randomness in the section on statistics.
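The difference between a randomized survey and a non-randomized one can be sketched with a small simulation. Everything in it is invented for illustration: the population of 100,000, the two regions, and the blue-eye rates in each are hypothetical numbers, not real data.

```python
import random


def build_population() -> list[tuple[str, bool]]:
    """Return (region, has_blue_eyes) records for 100,000 hypothetical people.

    The regions and rates are invented: the north is small with many
    blue-eyed people, the south is large with few. Overall, 10% have blue eyes.
    """
    north = [("north", i < 8_000) for i in range(20_000)]   # 40% blue-eyed
    south = [("south", i < 2_000) for i in range(80_000)]   # 2.5% blue-eyed
    return north + south


def blue_eyed_share(people: list[tuple[str, bool]]) -> float:
    """Fraction of the given people who have blue eyes."""
    return sum(has_blue for _, has_blue in people) / len(people)


rng = random.Random(0)  # fixed seed so the run is repeatable
population = build_population()

# Randomized survey: every person is equally likely to be picked.
random_estimate = blue_eyed_share(rng.sample(population, 2_000))

# Non-randomized survey: we only ask people in the north.
northerners = [p for p in population if p[0] == "north"]
biased_estimate = blue_eyed_share(rng.sample(northerners, 2_000))

print(f"true share:        {blue_eyed_share(population):.3f}")
print(f"random sample:     {random_estimate:.3f}")
print(f"north-only sample: {biased_estimate:.3f}")
```

Both surveys ask the same number of people, yet only the randomized one should land near the true share. The north-only survey is just as large and still badly off, because the group it draws from does not resemble the whole population.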

Abductive Reasoning

Abductive reasoning attempts to form the simplest, likeliest, or most plausible explanation or hypothesis which can explain the observation of some phenomenon, given an incomplete data set. This is often referred to as an “inference to the best explanation.”4 The general rule of thumb used in abductive reasoning is known as Ockham’s razor, or the idea that the best explanation, that is, the one most likely to be true, is the one which is the simplest or most plausible given what we already know. Abductive reasoning, like inductive reasoning, is commonly employed in science in order to arrive at hypotheses that are most likely to be true, though not guaranteed to be so. Empiricism, or testing of these hypotheses (using inductive logic), will later confirm whether they are actually – to the best of our knowledge – in accordance with reality.

A particularly illustrative example of abductive reasoning can be seen in medicine, where the physician uses abduction to first make differential diagnoses (i.e., a list of conditions that are known to produce the signs and symptoms which the patient is presenting with) that are possible given the limited information available to the physician; the physician then pares the list of differential diagnoses down to a single diagnosis by ruling out competing diagnoses. A common saying in medicine is “When you hear the beating of hooves first look for horses, not zebras,” that is, first you should look for what is likely or plausible, not for something which is exotic or unlikely (obviously, this saying would only make sense in areas where horses are more common than zebras). According to the principle of abduction expressed by this common saying, the diagnostician should rank their differential diagnoses by likelihood and start at the top in their investigation, moving downward as each possibility is ruled out, until one can be confirmed by diagnostic tests.

In my experience working as a veterinary nurse I witnessed a mistake of abductive reasoning made by a doctor. In this case, the patient, an adult dog, had unexplained anemia, which could not be explained by ectoparasites (fleas, ticks, mites, etc.), malnutrition, or an obvious disease process. The doctor arranged for the patient to be brought back in to go under general anesthesia for a bone marrow biopsy, suspecting that bone marrow suppression was the cause of the anemia. I happened to be working the day the animal was having the procedure done and noticed that we had not done a fecal analysis on the dog in years. I found some stool in the dog’s kennel and quickly ran an analysis on it. Sure enough, there were hookworm ova present in large numbers (hookworms are intestinal parasites that consume the blood of their host). This was a simple, highly plausible explanation of the animal’s anemia which the doctor had overlooked before moving on to search for more exotic or less likely explanations.

Section 3: Informal Fallacies

Now let’s consider some informal fallacies and how they can make an argument unsound or non-cogent. If we look back to the last paragraph of section 1 we will see that an informal fallacy occurs when there is an error in one’s information or reasoning which makes the argument unsound, that is, unconvincing or implausible, or simply untrue. Below is a list of some of the most common informal fallacies. After understanding these fallacies you will find that they often crop up in debates and discussions. It is important to look at our own reasoning for these mistakes, and even our established views to see if we have used fallacious reasoning when arriving at them.

[Note: I will expand on some of these more than others due to the fact that some of these fallacies require further explanation. Some of the fallacies require extra explanation due to the fact that the reasoning they attempt to employ could be used in a non-fallacious way, if the argument is constructed correctly.]

Section 3.1: Fallacies of Relevance – appealing to something that is irrelevant

Argumentum ad hominem (aka ad hominem fallacy) – when one attacks the character of the person they are debating with, in an effort to discredit them, rather than their argument. Poisoning the well is a type of argumentum ad hominem where one attempts to discredit their opponent before they have made an argument, in hopes of discrediting everything they are about to say. The tu quoque fallacy (aka appeal to hypocrisy) is like a combination of a red herring and an argumentum ad hominem where one attempts to deflect attention away from addressing the argument at hand in order to bring up the hypocrisy of their opponent. This is considered fallacious, not because it isn’t sometimes important to point out the hypocrisy of one’s opponent, but because it draws attention away from the argument at hand. To reiterate, pointing out hypocrisy is not inherently fallacious; it is only fallacious when one does so in a way that diverts attention away from the argument at hand. If this is done intentionally it might be to divert attention away from an argument or issue they would rather not address, or in order to discredit one’s opponent so that it would seem that their argument is wrong by default. Likewise, it may be important to introduce the fact that your opponent’s character or habitual behavior indicates that they are often deceitful or untrustworthy, but one should not expect to dismiss their opponent’s argument solely by doing so – one must also address their argument. Often the character of one’s opponent or their hypocrisy is wholly irrelevant because it is so unrelated to the argument at hand. Clearly it can be the case that a person who is judged as being of poor character has presented a strong argument that is correct or at least worthy of consideration, and this is why these fallacies fall into the category of fallacies of relevance.

(Fallacious) Appeal to authority – appealing to an authority is not always fallacious. For example, one can bolster their argument by pointing out that their argument is in line with the scientific consensus on the matter; this lends merit to their argument. An appeal to authority is fallacious only if it appeals to an illegitimate authority.

Consider this example of an appeal to an illegitimate authority: “My uncle has a PhD in biology and he thinks evolution is an elaborate hoax perpetrated by the government.” Citing the opinion of one supposed expert does not constitute a legitimate authority on a matter. One can always find an expert to agree with their conclusion. For example, there is always an extreme minority of people with advanced science degrees who disagree with the vast majority of their colleagues on certain matters. If you look long enough you can even find experts who believe in the most absurd pseudoscientific theories ever conceived, such as young Earth creationism or evolution denial – fortunately, they are an extreme minority. [Note: this is not to say that a consensus opinion of experts absolutely cannot be wrong, or that no one should ever challenge such consensuses, but that the evidence required to do so must be extraordinarily strong. See: Sagan’s standard aka ECREE.]

The sheer fact that a singular expert is on your side does not lend merit or plausibility to your argument. Here is another example of a fallacious appeal to an illegitimate authority: “My naturopath told me that my cancer is being caused by toxins from non-organic food and that chemotherapy will only exacerbate this problem.” In this case the person is citing the opinion of someone who is not an actual expert, that is, they are not an actual medical doctor (naturopathy is not an evidence-based field and is widely considered to be pseudoscience).

Fallacy of Relative Privation (or Appeal to Worse Problems) — the fallacy of relative privation occurs when someone dismisses or downplays the importance of a problem or issue by comparing it to other seemingly more significant or severe problems. This fallacy suggests that because there are more significant or dire problems in the world, the lesser problem should be ignored, disregarded, or considered unimportant in comparison. The fallacy relies on the notion that attention or resources should only be allocated to the most severe issues, and any focus on lesser problems is unwarranted or trivial.

However, this fallacy is flawed because it assumes that attention and resources cannot be distributed across multiple problems or issues simultaneously. It overlooks the fact that problems can exist on a spectrum, and their significance or impact should be assessed based on their own merits rather than solely in relation to other problems. Each problem or issue can have its own unique consequences, and dismissing one problem because there are more significant problems fails to acknowledge the importance of addressing multiple issues in their respective contexts.

Furthermore, the fallacy of relative privation can also undermine empathy and compassion by suggesting that individuals should only focus on the most severe problems, neglecting the importance of addressing smaller-scale issues that may still have significant impacts on individuals or communities.

Recognizing this fallacy allows us to avoid dismissing or devaluing problems based on their perceived significance compared to others. It encourages a more nuanced and comprehensive approach to addressing various issues rather than engaging in a hierarchy of importance based on relative severity.

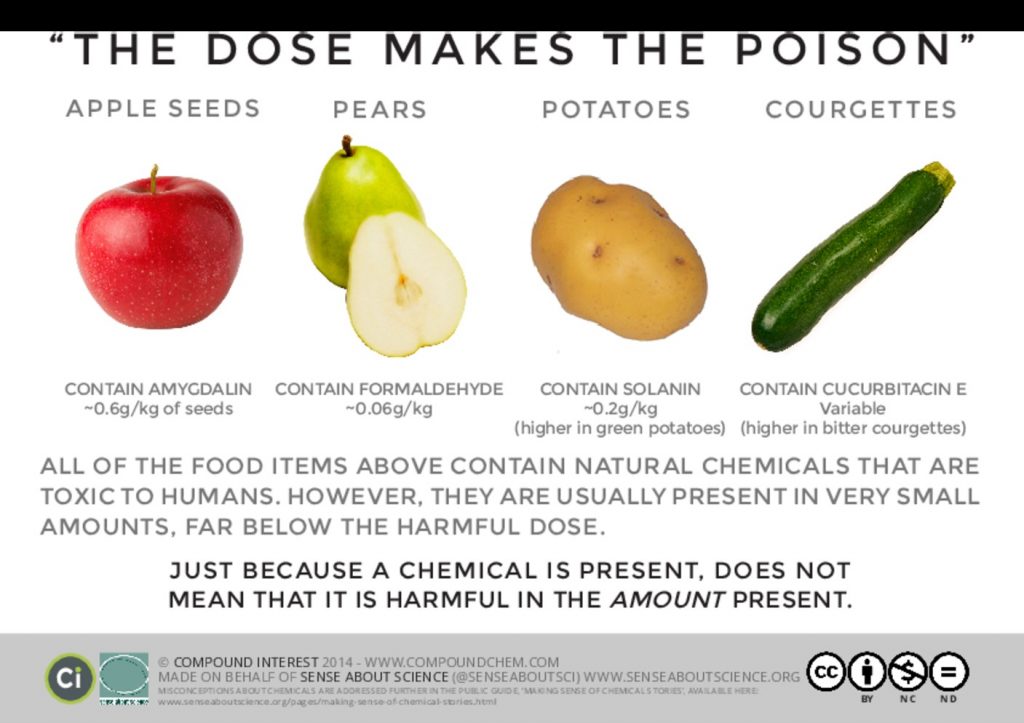

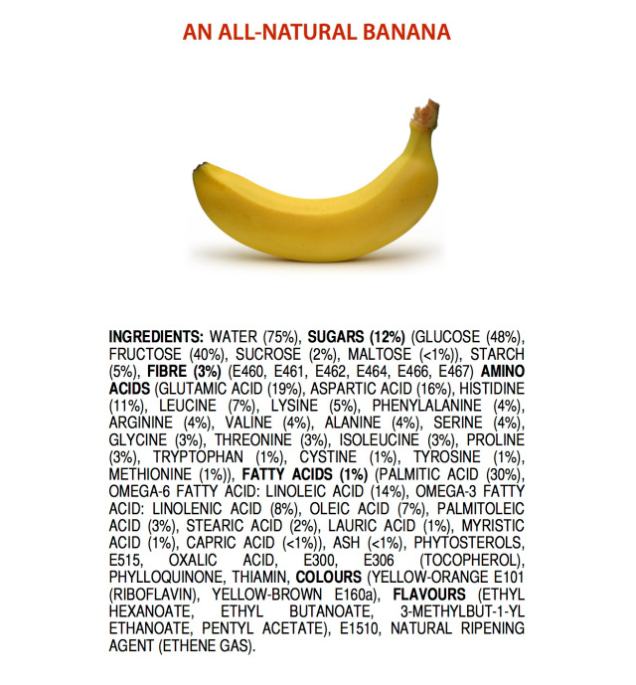

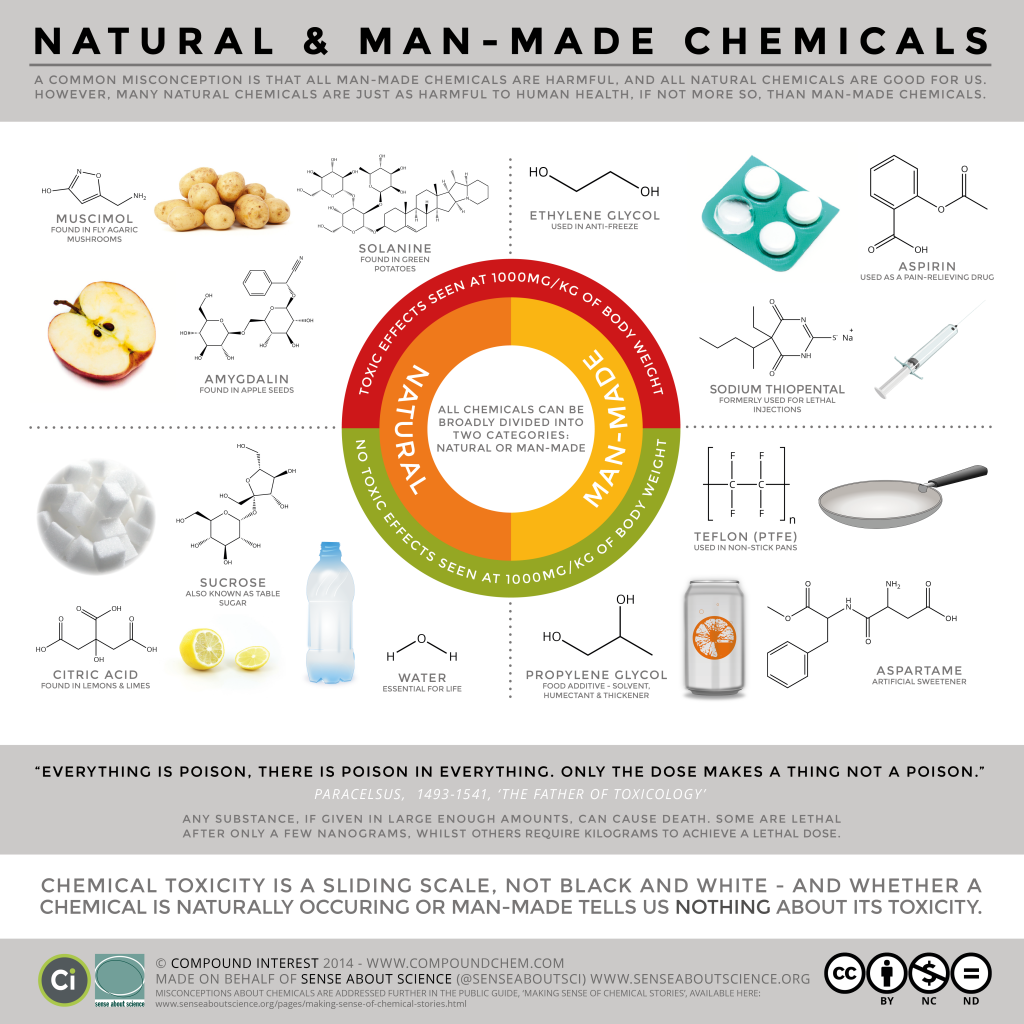

Appeal to nature fallacy (sometimes mistakenly referred to as the naturalistic fallacy) – one makes a fallacious appeal to nature if they consider something to be “good” – either morally or otherwise – based solely on the fact that they consider it to be “natural”. This fallacy is most commonly found in ethical arguments, but it is sometimes used in other contexts, as well. The most fundamental problem associated with this fallacy is the fact that the distinction between natural and unnatural is not clear. In ethical contexts some want to draw a line between human civilization and the natural world, but it is not clear why any line should be drawn or where it should be drawn. Certain complicated questions arise: What is meant by the word “nature” or “natural”? Do humans somehow exist outside of nature because they can manipulate the environment in certain ways that other animals cannot, or to a degree which they cannot?

In non-ethical contexts some want to draw a line between technologies or chemicals which they consider to be natural and technologies or chemicals that they consider to be unnatural. With regard to chemicals this often takes the form of describing substances they view to be harmful as “chemicals”, and regarding all neutral or beneficial substances as non-chemicals – a view which makes no sense considering that all ordinary matter (as opposed to hypothetical forms of matter, such as dark matter) is composed of chemicals (chemistry is simply the study of matter, and all matter is composed of chemicals). [Note: It is much more accurate and clear to say that something is a toxin or that it is toxic when you want to dissuade persons from coming into contact with it.] Again, it is hard to make a clear distinction since nearly all of the material objects (ordinary matter) we encounter in our lives are made up of elements and compounds that naturally occur in the universe. Of the relatively few synthetic elements which can be produced in a lab, most degrade extremely rapidly or are radioactive (there are many radioactive elements which occur naturally as well), and we are unlikely to ever encounter these. Usually when people say something is natural they mean that it readily occurs in an environment without any sort of human interference, but unbeknownst to most of these individuals such a definition would include many chemicals which they would probably consider unnatural, e.g., pyrethrin, salicylic acid (used to make aspirin), amygdalin (turns into cyanide in the body), morphine, penicillin, etc.

Images from: http://jameskennedymonash.wordpress.com/category/infographics/ingredients-infographics/

In an ethical context an example of the appeal to nature fallacy would be when a person argues that an action is morally good or right because it occurs in nature or because it is natural, or conversely that something is bad because it is unnatural. Not only does this argument face the problem with defining natural vs. unnatural, but it is also a fallacious assumption because it is based on a flawed premise. Let’s look at an argument that employs this fallacious reasoning in basic form:

P1: An action is morally good or right if it is natural.

P2: Action X is natural.

C: Therefore action X is good.

The first premise here is flawed because it is an instance of question begging. Question begging is a broad category of fallacious reasoning in which the conclusion of the argument is assumed in one of the premises. When this type of flawed reasoning is employed we will usually find that one of the premises has the assumed conclusion hidden in it, so that it is not always readily apparent. Above we see that premise 1 is begging the question because it assumes that that which is natural is good – which is exactly the conclusion the arguer is attempting to prove. This makes the argument circular, and circular reasoning is always fallacious because neither the premise nor the conclusion has been sufficiently proven. A circular argument is usually valid (as is the above example), but it fails to be cogent or sound – which is why it is considered to be an informal fallacy.
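The claim that the argument above is valid, even though it is not sound, can be checked mechanically. The following is a minimal sketch that treats the argument as a simple propositional form (P1 applied to the single action X), which is a simplification made for illustration. The helper names `implies` and `is_valid` are hypothetical.

```python
from itertools import product


def implies(a: bool, b: bool) -> bool:
    """Material conditional: false only when a is true and b is false."""
    return (not a) or b


def is_valid(premises, conclusion, n_vars: int) -> bool:
    """An argument form is valid if no assignment of truth values makes
    every premise true while the conclusion is false."""
    for values in product([True, False], repeat=n_vars):
        if all(p(*values) for p in premises) and not conclusion(*values):
            return False
    return True


# natural = "action X is natural", good = "action X is good"
p1 = lambda natural, good: implies(natural, good)   # P1, applied to action X
p2 = lambda natural, good: natural                  # P2
c = lambda natural, good: good                      # C

print(is_valid([p1, p2], c, n_vars=2))  # True: the form is valid
```

The check only looks at the form of the argument. It says nothing about whether P1 is actually true, and that is where the argument fails.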

In less theoretical and more concrete terms we can see that this argument is flawed when it is used to make a moral argument. It is clear that many natural acts, such as infanticide, forced copulation, aggressive violence, etc., often occur in the natural world – and this is the case whether we are looking at pre-social contract human communities or groups of non-human animals. None of these acts conform to our moral intuitions or most modern, advanced societies’ mores and systems of law, and therefore a significant burden of proof is placed on the individual who wishes to argue that our moral intuitions, mores, and systems of law are actually wrong.

Outside of ethical contexts an appeal to nature is not always fallacious, if what is meant by “natural” and “good” is clearly defined from the outset. For example, an appeal to nature is not fallacious if it is merely used to demonstrate why members of a species have a predisposition for certain types of behavior – this is what evolutionary psychology often attempts to do – but if one wants to argue that these predisposed actions are morally acceptable or obligatory actions, they have to argue further to show why this is the case. It could be argued that in some cases it is a good rule of thumb to appeal to nature to make recommendations, such as, when looking at nutrition, medicine, psychology, etc., because of the “hardwiring” which evolution creates in our bodies. For instance, one could argue that a certain diet is better than another because it conforms more to what our bodies evolved to use for energy. In this instance an appeal to nature is not fallacious if it accounts for the fact that there are definite exceptions to this rule of thumb.

That said, fallacious appeals to nature can be made in these fields; for example, some have argued that “natural” medicines (in this context natural usually means occurring readily in a certain wild environment and having a long history of use) are the best medicines to use because of their long history of use and due to the fact that they readily occur in an environment, but it is not always the case that such medicines really are the best to use, and in many cases (as verified by scientific studies) newer, “synthetic” medicines work much better. In fact, many studies have shown that there is no evidence to support the efficacy of a large number of so-called natural medicines. Furthermore, it is clear that many naturally occurring substances are deleterious to our health, so the fact that something is natural does not make it good by default.

Sometimes the appeal to nature fallacy is called the naturalistic fallacy, but this is not accurate. The naturalistic fallacy is what the philosopher G.E. Moore considered to be an erroneous equation of a natural property with goodness. Moore did not believe that the good could be defined in terms of some natural property such as pleasure because he thought that goodness was irreducibly simple. Moore believed he could prove the fallaciousness of equating the good with some natural property by use of his open question argument. See also: moralistic fallacy.

False analogy (also known as poor analogy or the apples-to-oranges fallacy) – a logical fallacy that occurs when an analogy is drawn between two things or situations that are not truly comparable. The fallacy assumes that because two things share some similarities, they must be similar in other respects or have the same outcome. However, this assumption is flawed as it overlooks the important differences between the two things being compared.

Analogies are often used to help explain complex ideas by drawing parallels between familiar or simpler concepts. They can be useful for understanding new concepts or making a point more relatable. However, a false analogy arises when the similarities between the two things being compared are not significant enough or relevant to justify the conclusion being drawn.

When encountering a false analogy, it is important to critically evaluate whether the shared similarities are truly relevant and whether the differences between the two situations outweigh the similarities. A valid analogy requires that the shared attributes or circumstances being compared are sufficiently similar to warrant drawing meaningful conclusions.

By recognizing and avoiding false analogies, we can ensure that our reasoning is based on valid comparisons and sound logical principles, allowing for more accurate and reliable conclusions.

Red Herring – a “red herring” is an argument or assertion that is irrelevant to the current issue under consideration. Committing this fallacy can be intentional or unintentional. For example, consider a debate about marijuana legalization: in this debate person A is arguing that legalizing marijuana would bring in tax revenue for the state and this constitutes a reason to legalize it. In response, person B argues that “taxes are theft” and the government shouldn’t be extracting taxes from its citizens anyway. In this case person B has employed a red herring fallacy that distracts from the issue at hand.

Section 3.2: General Informal Fallacies

Strawman fallacy – the strawman fallacy occurs when someone misrepresents or distorts their opponent’s argument in order to make it easier to attack or refute. Instead of addressing the actual position or argument put forth by the other person, the fallacy involves creating a weaker or distorted version of it and then refuting that misrepresentation. By attacking a strawman, the person using this fallacy avoids engaging with the strongest form of the opposing argument. It is essential to accurately represent and understand the arguments of others in order to have productive and meaningful discussions.

Nirvana fallacy (aka perfect solution fallacy) – a fallacious tactic used, usually in debates about ethics, to discredit an argument’s conclusion – usually a proposed solution to a problem – because that solution is not a perfect solution. This fallacy gets its name from the Buddhist idea of a perfect state of being or enlightenment – Nirvana. For a simple example of this fallacy, let’s consider a situation where people are debating ways of reducing automobile-related fatalities. One person in this debate, let’s call her Mary, might argue that wearing seat belts should be mandatory to reduce fatalities. If another person, Joe, argues that Mary’s proposed solution shouldn’t be considered because people can still die in car accidents while wearing seat belts, then he is using a nirvana fallacy to discredit her proposed solution. Joe could argue against Mary’s proposal by offering a better solution, or by showing why her proposal will not work, or why he thinks it will cause more harm than good, but he cannot simply discredit it by saying that it isn’t a perfect solution. Obviously, because we know that seat belts do reduce the incidence of automobile crash-related fatalities, Mary’s proposal, although not capable of creating a perfect, zero-fatality situation, is better than no proposal at all.

Post hoc ergo propter hoc (False Cause) — this fallacy, also known as the fallacy of false cause or correlation implies causation fallacy, occurs when it is assumed that because one event follows another, the first event must have caused the second event. In other words, it incorrectly assumes a causal relationship solely based on the temporal sequence of events. However, correlation does not necessarily imply causation. There may be other underlying factors or coincidences that explain the observed relationship. It is important to consider alternative explanations and seek evidence beyond mere temporal association when making causal claims.

Argument by verbosity (Gish gallop, shotgun argumentation) — this fallacy involves overwhelming an opponent or an audience with a rapid barrage of numerous arguments, often delivered in a fast-paced, verbose manner. By presenting a large quantity of arguments, the person employing this fallacy seeks to make it difficult for their opponent to address or refute each point effectively within a limited time frame. The aim is to create an impression of strength and credibility by sheer quantity rather than the quality or validity of the arguments presented. This fallacy can be deceptive, as it relies on overwhelming opponents rather than engaging in substantive debate or addressing the core issues at hand.

Question begging (assuming the conclusion) – making an argument in which one or more of the premises assumes that the conclusion of the argument is true. Often this assumption is implicit or concealed so that it is not easily seen. A loaded question is related to question begging, in that a loaded question presumes something to be true in an attempt to limit the response of whoever is being interrogated.

Weasel words — weasel words refer to words or phrases that are intentionally vague, ambiguous, or misleading in order to evade or obscure the truth or to create a false impression. They are often used in advertising, politics, or persuasive writing to make claims that sound meaningful or significant but lack substance or clarity. Weasel words can give the impression of making a strong statement while allowing for multiple interpretations or providing an easy way out if challenged for evidence or specifics.

Wrong direction of causal flow — this fallacy occurs when the cause and effect relationship between two events or variables is reversed or misunderstood. Instead of recognizing the actual cause and its resulting effect, this fallacy mistakenly attributes the effect as the cause or vice versa. It overlooks the proper sequence of events and can lead to incorrect conclusions or faulty reasoning.

Tu quoque (aka the appeal to hypocrisy, whataboutism) — the tu quoque fallacy is a diversionary tactic that involves dismissing an opponent’s argument or criticism by pointing out their own hypocrisy or inconsistency. Instead of addressing the substance of the argument, this fallacy attempts to discredit the opposing viewpoint by suggesting that the person making the argument is not consistent with their own beliefs or actions. While hypocrisy can undermine credibility, it does not necessarily invalidate the merit of an argument. It is important to evaluate arguments based on their own logical and evidential support, rather than solely focusing on the behavior or character of the person making the argument.

Argument to moderation (aka golden mean fallacy) — the argument to moderation fallacy occurs when it is assumed that the truth or correctness lies somewhere in between two extreme positions simply because it represents a compromise or a middle ground. This fallacy ignores the possibility that one extreme position may be more valid or supported by evidence than the other, or that the truth may lie outside the spectrum of the two presented positions. It is important to evaluate arguments based on their own merits and evidence rather than assuming that a compromise between two positions is inherently correct or reasonable.

False dilemma — this fallacy, also known as the false dichotomy, occurs when someone presents a situation as having only two options or choices, when in reality, there are other alternatives or possibilities. It oversimplifies a complex issue by limiting the options to an either-or scenario, ignoring potential middle grounds or alternative solutions. While there may be situations where choices are limited, it is important to recognize that many real-world problems often have multiple nuanced options.

Appeal to emotion — this fallacy involves manipulating emotions, such as fear, sympathy, or anger, to support or reject a claim or argument. Instead of relying on logical reasoning or evidence, this fallacy attempts to persuade by evoking an emotional response from the audience. Appeals to emotion can be powerful, but they do not necessarily provide valid or reliable justifications for an argument. It is essential to assess claims based on their merits and evidence rather than being swayed solely by emotional appeal.

Thought-terminating cliché – this fallacy refers to using a catchy phrase or slogan to dismiss or end a discussion or debate, preventing further exploration or critical thinking. It aims to shut down opposing viewpoints by employing a cliché or a seemingly profound statement that implies the matter is settled or beyond questioning. For example, “God works in mysterious ways” is a common thought-terminating cliché used to deflect inquiries about the logic or reasoning behind a religious belief. While such statements may serve to satisfy individuals on a personal level, they do not provide substantive explanations or address the underlying issues being discussed.

Bandwagon fallacy (argumentum ad populum or appeal to the majority) — this fallacy occurs when someone argues that a claim must be true or acceptable because a majority of people believe or support it. The mere fact that a large number of people believe something does not make it inherently true, as popular opinion is not a reliable indicator of truth or validity. The strength of an argument should rely on evidence, reasoning, and logical consistency rather than the number of people who hold a particular belief.

Slippery slope — the slippery slope fallacy is characterized by suggesting that a particular action or event will inevitably lead to a series of increasingly negative consequences, without sufficient evidence or logical support. It assumes a chain reaction where a single action will set off a sequence of events that leads to an undesirable outcome. While causal relationships and consequences do exist, it is important to critically evaluate the specific evidence and logical connections to determine if the predicted sequence of events is truly likely or merely speculative.

Argumentum ad ignorantiam (Appeal to Ignorance) or Burden of Proof Reversal – this fallacy occurs when someone asserts that a claim is true because it has not been proven false, or vice versa. It shifts the burden of proof onto the person questioning the claim rather than the person making the assertion. For example, if someone claims to have $10 in their pocket and another person expresses doubt, the burden of proof is on the person making the claim to provide evidence of their assertion. Merely stating that the other person cannot prove the claim false does not provide sufficient evidence to support the original claim.

Non-sequitur — a non-sequitur is a logical fallacy in which a conclusion or inference does not logically follow from the premises or evidence presented. It is a statement or argument where the connection between the claim and the supporting evidence is missing or irrelevant. In a valid argument, the conclusion should logically flow from the premises. A non-sequitur undermines the logical coherence and validity of an argument by introducing unrelated or inconsequential information or making unsupported leaps in reasoning.

Section 3.3: Erroneous generalizations and inductive fallacies

Hasty generalization fallacy — the hasty generalization fallacy occurs when a conclusion or generalization is drawn from a limited or insufficient sample size. It involves making a sweeping statement about a whole group or population based on only a few examples or instances. By relying on a small number of observations, this fallacy overlooks the potential variations or diversity within the group being generalized. Hasty generalizations can lead to stereotypes or unfair judgments, as they fail to account for the full range of possibilities or adequately represent the entire population.

Gambler’s fallacy — the gambler’s fallacy, also known as the Monte Carlo fallacy, is the mistaken belief that the probability of an event occurring is influenced by previous independent events, particularly in games of chance. It occurs when someone assumes that past outcomes affect future outcomes, despite each event being statistically independent. For example, in a game of roulette, if the ball has landed on black several times in a row, the gambler’s fallacy suggests that red is “due” to appear next. In reality, the odds of the ball landing on red or black remain the same on each spin, regardless of past outcomes. The fallacy arises from a misunderstanding of probability and randomness.
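The independence of roulette spins can be illustrated with a short simulation. This is a minimal sketch that assumes a single-zero wheel (18 red, 18 black, and 1 green pocket) and treats three blacks in a row as a “streak”. The function name `simulate` and the number of spins are arbitrary choices for illustration.

```python
import random

POCKETS = ["red"] * 18 + ["black"] * 18 + ["green"]  # assumed single-zero wheel
STREAK = 3  # how many blacks in a row count as a "streak"


def simulate(spins: int, seed: int = 0) -> tuple[float, float]:
    """Return (overall share of red, share of red right after a black streak)."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    results = [rng.choice(POCKETS) for _ in range(spins)]

    # Collect every spin that immediately follows STREAK blacks in a row.
    after_streak = [
        results[i]
        for i in range(STREAK, spins)
        if all(r == "black" for r in results[i - STREAK:i])
    ]
    overall_red = results.count("red") / spins
    red_after_streak = after_streak.count("red") / len(after_streak)
    return overall_red, red_after_streak


overall, after = simulate(200_000)
print(f"red overall:                {overall:.3f}")
print(f"red after {STREAK} blacks in a row: {after:.3f}")
```

Both figures should come out close to 18/37 (about 0.486). If red really were “due” after a run of blacks, the second figure would be noticeably higher than the first.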

Strategies for Forming a Cogent Argument

Define your terms first!

Defining terms is crucial in philosophical argumentation for several reasons:

- Clarity: Philosophical discussions involve the exploration of abstract and complex ideas. Defining terms at the outset helps to establish a shared understanding of the concepts being discussed. Clear and precise definitions ensure that everyone involved in the discussion is on the same page and avoids confusion or misunderstandings. It provides a solid foundation for engaging in meaningful and productive discourse.

- Precision: Philosophy often deals with nuanced distinctions and subtle differences in meanings. By defining terms, philosophers can specify the scope and precise connotations of the concepts they are using. This precision helps to avoid ambiguity and ensures that arguments are built on accurate and well-understood premises. It also allows for a more precise examination of the implications and logical consequences of the arguments being made.

- Rigor: Philosophy strives for logical rigor and sound reasoning. Defining terms is an essential step in constructing well-formed arguments. By providing clear definitions, philosophers can establish the boundaries and parameters of their arguments, ensuring that the reasoning remains focused and coherent. Without clear definitions, arguments can become muddled or open to interpretation, weakening the logical strength of the overall position.

- Avoiding Strawman Fallacy: Failing to define terms can lead to the strawman fallacy, where an opponent misrepresents or distorts the original argument by assigning different meanings to the terms used. By defining terms upfront, philosophers can mitigate the risk of misinterpretation and misrepresentation, fostering more accurate and respectful engagements with opposing viewpoints.

- Progression of Discussion: Defining terms helps in guiding the progression of philosophical discussions. It provides a framework for examining the implications and consequences of particular definitions and allows for the exploration of alternative definitions or counterarguments. This facilitates a more systematic and structured exchange of ideas, enabling participants to delve deeper into the subject matter and generate more meaningful insights.

Reductio ad absurdum (aka argumentum ad absurdum) – this is a form of argument whereby one tries to prove that an argument or statement is true, or likely to be true, by showing that its negation (denial) would lead to an absurd or false conclusion or a contradiction; or, conversely, where one tries to prove that an argument or statement is false, or likely to be false, by showing that its affirmation, or its acceptance as truth, would lead to an absurd or false conclusion or a contradiction. “Absurdity” essentially means a conclusion that is implausible, improbable, a contradiction, or logically impossible.

Example 1: If it were false that my grandpa was in the army in World War II, then he would have had to fake all those pictures of him in uniform with other soldiers, and all the letters written to grandma about his experiences.

Explanation: This reductio ad absurdum does not prove that grandpa was in the army, but it does show that it is very likely that he was, because the conclusion that follows from the denial that he was in the army is highly implausible (assuming we have no evidence to suggest that grandpa is an extremely skilled conman, that is).

Example 2:

P1: If it were true that every person who works hard will get rich, then all the people working full-time at the town factory would be rich.

P2: It is true that everyone who works hard will get rich.

C: Everyone working full-time at the factory is rich.

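Explanation: It is a fact that not everyone who works at the town factory is rich, so the conclusion C is false. P1 and P2 together entail C, so at least one of them must be false. P1 only spells out what P2 would imply for the factory workers (granting that they work hard), so the premise to reject is P2: it cannot be true that hard work guarantees that one will be rich.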

(Note: In formal symbolic logic the reductio ad absurdum is known as an “indirect proof” or “not [~] introduction”. In a logical proof using indirect proof [IP] one assumes the negation of the conclusion [or, sometimes, a negation of one of the premises] and then shows that it leads to a contradiction. This proves that the conclusion is correct.)
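The logical skeleton of Example 2 can be checked mechanically with a truth table. In the sketch below, P and Q are labels chosen for this illustration (P: "everyone who works hard gets rich"; Q: "every full-time factory worker is rich"), and the function names are likewise hypothetical. It tests whether there is any assignment of truth values where "if P then Q" and "not Q" both hold while P is still true.

```python
from itertools import product


def implies(a: bool, b: bool) -> bool:
    """Material conditional: false only when a is true and b is false."""
    return (not a) or b


def reductio_is_valid() -> bool:
    """Check that (P -> Q) together with (not Q) guarantees (not P)."""
    for p, q in product([True, False], repeat=2):
        premises_hold = implies(p, q) and (not q)
        if premises_hold and p:
            # Counterexample: premises true, yet P is also true.
            return False
    return True


print(reductio_is_valid())
```

No counterexample exists, so whenever the consequence Q is known to be false, the assumption P that led to it must be rejected.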

When using a reductio ad absurdum, take care not to create a strawman of the argument you are trying to refute.

In fact, we want to steelman arguments which we are examining or arguing against. Steelmanning (or rigorously applying the principle of charity) is the practice of actively seeking out the strongest and most compelling version of an argument, even if it is different from our initial understanding or viewpoint. Instead of attacking a weak or distorted version of an opponent’s argument (strawman fallacy), steelmanning involves honestly representing and engaging with the best possible interpretation of their position.

Steelmanning is important for several reasons. Firstly, it promotes intellectual honesty and fairness in discussions by treating opposing arguments with respect and sincerity (arguing in good faith). By engaging with the strongest version of an argument, we can challenge our own beliefs, test the robustness of our positions, and foster a more genuine exchange of ideas.

Secondly, steelmanning allows for a deeper understanding of different perspectives. By seeking out the strongest form of an argument, we can identify potential merits, uncover underlying assumptions, and notice nuances that may have been overlooked. This process expands our knowledge and contributes to a richer exploration of the subject matter.

Additionally, steelmanning enhances the overall quality of discourse and facilitates more productive conversations. It helps to build bridges between different viewpoints, promoting constructive dialogue and the potential for finding common ground or resolving disagreements. By addressing the strongest version of an argument, we can focus on substantive issues, identify shared concerns, and work towards greater understanding or possible solutions.

Steelmanning is part of arguing in good faith, which means engaging in a discussion or debate with sincerity, honesty, and a genuine intention to seek truth or reach a resolution. It involves approaching arguments and opposing viewpoints with an open mind, being willing to listen, consider alternative perspectives, and engage in constructive dialogue.

Section 4: Understanding the Basics of Statistics

Statistics is a branch of mathematics that deals with the collection, analysis, interpretation, presentation, and organization of data. It provides tools and methods to make sense of data and draw meaningful conclusions from it. In this introduction, we will explore three fundamental measures of central tendency: mean, median, and mode.

Mean (µ): The mean is often referred to as the average and is calculated by summing up all the values in a dataset and dividing the sum by the total number of values. The mean of a population is commonly written µ (the Greek letter “mu”), while the mean of a sample is written x̄ (x-bar). The mean gives a single typical value for a dataset, but it is sensitive to extreme values (outliers), so a few very large or very small values can pull it away from where most of the data lie. It is used when you want to understand the average value of a set of data.

Median: The median is the middle value in a dataset when the values are arranged in ascending or descending order. If the dataset has an odd number of values, the median is the middle value itself. If the dataset has an even number of values, the median is the average of the two middle values. The median is useful when dealing with skewed distributions or when outliers can heavily impact the mean.

Mode: The mode is the most frequently occurring value in a dataset. A dataset can have no mode (when all values occur equally) or multiple modes (when two or more values have the highest frequency). The mode is useful for identifying the most common value or category in a dataset.
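As a quick illustration of the three measures, here is a minimal sketch using Python's built-in statistics module. The income figures are invented for the example; note how the single large value pulls the mean well above the median.

```python
import statistics

# Hypothetical yearly incomes in thousands; 250 is a deliberate outlier.
incomes = [30, 32, 35, 35, 40, 250]

mean = statistics.mean(incomes)        # sum of values divided by their count
median = statistics.median(incomes)    # average of the two middle values here
modes = statistics.multimode(incomes)  # list of the most frequent value(s)

print(f"mean={mean:.1f}  median={median}  modes={modes}")
```

With data like this, the median and mode describe the "typical" income better than the mean does, which is why the median is usually preferred for skewed data.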

Population vs. Sample:

In statistics, a population refers to the entire group of individuals, items, or events that we want to study and draw conclusions about. It includes every possible member of the group. The symbol used to represent the population mean is µ (mu), and the symbol used to represent the population standard deviation is σ (sigma).

On the other hand, a sample is a subset of the population that is selected for observation and analysis. A sample is chosen to represent the population, as studying the entire population might be impractical or time-consuming.

Mean (Sample): The sample mean, denoted as x̄ (x-bar), is the average value of a set of data points in a sample. It is calculated by summing up all the values in the sample and dividing the sum by the total number of values (n). The formula for the sample mean is:

x̄ = Σx / n

Here, Σ (capital sigma) represents the sum of all the values in the sample, x represents individual values in the sample, and n represents the total number of values in the sample.

The sample standard deviation is represented by s (see the section on standard deviation below).

Mean (Population): The population mean, denoted as µ (mu), is the average value of a variable in the entire population. It is calculated by summing up all the values in the population and dividing the sum by the total number of values in the population (N). The formula for the population mean is:

µ = Σx / N

Here, Σ (capital sigma) represents the sum of all the values in the population, x represents individual values in the population, and N represents the total number of values in the population.

Section 4.2: Standard Deviation and the Bell Curve

The standard deviation measures the spread or variability of data points around the mean. It quantifies how much the values deviate from the average. In a bell-shaped normal distribution, the standard deviation determines the width of the curve. A smaller standard deviation indicates that the data points are closely clustered around the mean, while a larger standard deviation suggests more dispersion.
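The usual formulas are σ = √( Σ(x – µ)² / N ) for a population and s = √( Σ(x – x̄)² / (n – 1) ) for a sample. The sketch below writes both out by hand and compares them with Python's statistics module; the data values and function names are illustrative assumptions.

```python
import math
import statistics


def population_sd(values):
    """sigma = sqrt( sum((x - mu)^2) / N ), treating values as a whole population."""
    mu = sum(values) / len(values)
    return math.sqrt(sum((x - mu) ** 2 for x in values) / len(values))


def sample_sd(values):
    """s = sqrt( sum((x - x_bar)^2) / (n - 1) ), treating values as a sample."""
    x_bar = sum(values) / len(values)
    return math.sqrt(sum((x - x_bar) ** 2 for x in values) / (len(values) - 1))


data = [2, 4, 4, 4, 5, 5, 7, 9]  # made-up values with mean 5

print(population_sd(data), statistics.pstdev(data))
print(sample_sd(data), statistics.stdev(data))
```

The sample version divides by n – 1 rather than n, so it comes out slightly larger; this compensates for the fact that a sample tends to understate the spread of the population it was drawn from.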

The bell curve, also known as a normal distribution or Gaussian distribution, is a symmetrical probability distribution that represents many natural phenomena. It is characterized by a specific shape where most data points cluster around the mean, with fewer values located farther away from the mean. The standard deviation helps define the width of the bell curve and plays a crucial role in determining probabilities associated with different ranges of values.
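To make the link between standard deviation and probability concrete, the following sketch uses NormalDist from Python's statistics module to compute the share of a normal distribution lying within 1, 2, and 3 standard deviations of the mean. The helper name share_within is made up for this example.

```python
from statistics import NormalDist


def share_within(k: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    """Probability that a normally distributed value lies within k SDs of the mean."""
    dist = NormalDist(mu, sigma)
    # Area under the curve between (mu - k*sigma) and (mu + k*sigma).
    return dist.cdf(mu + k * sigma) - dist.cdf(mu - k * sigma)


for k in (1, 2, 3):
    print(f"within {k} SD: {share_within(k):.4f}")
```

The results are roughly 68%, 95%, and 99.7%, and they are the same for any mean and standard deviation, which is why this pattern is a handy rule of thumb for normally distributed data.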

Understanding these statistical concepts and measures allows researchers, analysts, and decision-makers to gain insights from data, summarize information, compare different datasets, and make informed judgments based on the characteristics and patterns observed.

Section 4.3: Common Statistical Measurements

Z-scores and t-scores are common statistical measures used to standardize and compare data within a distribution. Here’s an explanation of each along with their formulas:

Z-Score: A z-score, also known as a standard score, represents the number of standard deviations a data point is away from the mean of a distribution. It allows us to assess the relative position of a data point within a distribution.

The formula for calculating the z-score of a data point (x) in a distribution with mean (μ) and standard deviation (σ) is:

z = (x – μ) / σ

In this formula, subtracting the mean from the data point and dividing it by the standard deviation yields the z-score.

A positive z-score indicates that the data point is above the mean, while a negative z-score indicates that it is below the mean. A z-score of 0 indicates that the data point is exactly at the mean.

Z-scores are useful for comparing values from different distributions, as they provide a standardized measure that can be used to assess relative positions and make meaningful comparisons.
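For instance, z-scores let us compare results on two exams that were graded on different scales. The scores, means, and standard deviations below are invented for illustration.

```python
def z_score(x: float, mu: float, sigma: float) -> float:
    """Number of standard deviations that x lies above (+) or below (-) the mean."""
    return (x - mu) / sigma


# Hypothetical exam results for one student.
math_z = z_score(82, mu=70, sigma=8)      # (82 - 70) / 8  = 1.5
history_z = z_score(88, mu=80, sigma=16)  # (88 - 80) / 16 = 0.5

print(math_z, history_z)
```

Although the raw history score is higher, the math score is the stronger result relative to the rest of the class: 1.5 standard deviations above the mean versus 0.5.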

T-Score: A t-score, similar to a z-score, expresses a difference in standardized units. However, it is specifically employed when dealing with small sample sizes or when the population standard deviation is unknown, and it is most often used to standardize a sample mean rather than a single data point.

The formula for calculating the t-score of a sample with sample mean (x̄), sample standard deviation (s), and sample size (n), measured against a population (or hypothesized) mean (μ), is:

t = (x̄ – μ) / (s / √n)

In this formula, subtracting the population mean from the sample mean and dividing the result by the standard error of the mean (the sample standard deviation divided by the square root of the sample size) yields the t-score.

T-scores are commonly used in hypothesis testing and when working with small sample sizes. They are based on the t-distribution, which has fatter tails compared to the standard normal distribution (z-distribution) to account for the additional uncertainty introduced by small sample sizes.
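A minimal sketch of the one-sample t statistic used in hypothesis testing, t = (x̄ – μ) / (s / √n), where μ is the population mean being tested against. The sample values and the hypothesized mean of 14 are made up for this example, and the function name is an assumption.

```python
import math
import statistics


def t_score(sample, mu):
    """One-sample t statistic: (sample mean - mu) / (s / sqrt(n))."""
    n = len(sample)
    x_bar = statistics.mean(sample)
    s = statistics.stdev(sample)        # sample SD (divides by n - 1)
    standard_error = s / math.sqrt(n)   # estimated SD of the sample mean
    return (x_bar - mu) / standard_error


sample = [12, 14, 16, 18, 20]  # hypothetical measurements
t = t_score(sample, mu=14)
print(round(t, 3))
```

The resulting value would then be compared against a t-distribution with n – 1 degrees of freedom (here 4) to judge how surprising the sample mean is if the true mean were 14.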

Both z-scores and t-scores express a difference from a mean in standardized units, allowing for meaningful comparisons and assessments of relative positions.

Section 4.4: Statistical Techniques & Analyses

Analysis of Variance (ANOVA): ANOVA is a statistical method used to compare means between two or more groups to determine if there are significant differences. It assesses the variance between groups and within groups to make inferences about population means.
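To show what "variance between groups and within groups" means in practice, here is a rough sketch of the F statistic for a one-way ANOVA, written in plain Python. The three groups are invented data and the function name is hypothetical; turning F into a p-value additionally requires the F-distribution, which is left out here.

```python
def one_way_anova_f(groups):
    """F = (between-group mean square) / (within-group mean square)."""
    all_values = [x for g in groups for x in g]
    grand_mean = sum(all_values) / len(all_values)
    k = len(groups)            # number of groups
    n_total = len(all_values)  # total number of observations

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual values around their own group mean.
    ss_within = sum((x - sum(g) / len(g)) ** 2 for g in groups for x in g)

    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)
    return ms_between / ms_within


groups = [[1, 2, 3], [2, 3, 4], [3, 4, 5]]  # hypothetical scores for 3 groups
f_stat = one_way_anova_f(groups)
print(f_stat)
```

A large F means the group means differ by more than the scatter inside each group would lead us to expect; an F near 1 means the differences between groups look like ordinary noise.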

Chi-Square Test: The chi-square test is a statistical test used to determine if there is a significant association between two categorical variables. It compares the observed frequencies with the expected frequencies to assess the independence or relationship between the variables.
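The comparison of observed and expected frequencies can be sketched as follows. The 2×2 table is invented for illustration, the function name is an assumption, and no continuity correction is applied. The expected count for each cell is what we would see if the two variables were independent: (row total × column total) / grand total.

```python
def chi_square_statistic(table):
    """Sum over all cells of (observed - expected)^2 / expected."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)

    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected in this cell if the variables were independent.
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed - expected) ** 2 / expected
    return chi2


# Hypothetical counts: rows = two groups, columns = answered yes / no.
observed = [[10, 20],
            [20, 10]]
chi2 = chi_square_statistic(observed)
print(round(chi2, 3))
```

For a 2×2 table there is 1 degree of freedom, and the conventional 5% critical value is about 3.84, so a statistic this size (about 6.67) would usually be read as evidence of an association between the two variables.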

Regression Analysis: Regression analysis is a statistical technique used to model and analyze the relationship between a dependent variable and one or more independent variables. It helps to understand the impact of independent variables on the dependent variable and make predictions.
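The simplest case, one independent variable and a straight-line relationship, can be sketched with the ordinary least-squares formulas. The study-hours data and the function name below are made up for this example.

```python
def fit_line(xs, ys):
    """Least-squares slope and intercept for the line y = intercept + slope * x."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # How x and y vary together, and how x varies on its own.
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    slope = s_xy / s_xx
    intercept = y_bar - slope * x_bar
    return slope, intercept


hours = [1, 2, 3, 4]   # independent variable (hypothetical)
scores = [3, 5, 7, 9]  # dependent variable (hypothetical)

slope, intercept = fit_line(hours, scores)
predicted = intercept + slope * 5  # prediction for 5 hours
print(slope, intercept, predicted)
```

Here the fitted line is score = 1 + 2 × hours, so each additional hour is associated with two more points. Real data will not fall exactly on a line, and a fitted relationship by itself does not show that one variable causes the other.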

Factor Analysis: Factor analysis is a statistical method used to identify underlying factors or dimensions in a set of observed variables. It helps to simplify complex data and identify the underlying structure or patterns.

Reliability and Validity: Reliability and validity are concepts related to the measurement of variables. Reliability refers to the consistency and stability of a measurement instrument, while validity concerns the extent to which a measurement instrument measures what it intends to measure.

Correlation Analysis: Correlation analysis examines the relationship between two continuous variables. It measures the strength and direction of the linear relationship between variables using correlation coefficients such as Pearson’s correlation coefficient.
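As an illustration, Pearson's correlation coefficient can be computed directly from its definition: the co-variation of the two variables divided by the product of their individual variations. The data and function name below are assumptions made for this sketch.

```python
import math


def pearson_r(xs, ys):
    """Pearson's r: ranges from -1 (perfect negative) to +1 (perfect positive)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    s_yy = sum((y - y_bar) ** 2 for y in ys)
    return s_xy / math.sqrt(s_xx * s_yy)


xs = [1, 2, 3, 4, 5]
print(pearson_r(xs, [2, 4, 6, 8, 10]))  # perfectly linear, increasing
print(pearson_r(xs, [10, 8, 6, 4, 2]))  # perfectly linear, decreasing
print(pearson_r(xs, [2, 1, 4, 3, 5]))   # positive but imperfect
```

The sign gives the direction of the relationship and the magnitude gives its strength. Because r only measures linear association, two variables can be strongly related in a curved way and still have an r near zero.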

Works Cited:

- Immanuel Kant, Paul Guyer, and Allen W. Wood, Critique of Pure Reason, A6 – 7. Cambridge: Cambridge University Press, 1998.

- Russell, Bertrand. The Problems of Philosophy. New York: Oxford University Press, 1959.

- Hume, David, L. A. Selby-Bigge, and P. H. Nidditch. A Treatise of Human Nature, 89. Oxford: Clarendon Press, 1978.

- Igor Douven, “Abduction,” Stanford University, 2011, accessed July 07, 2016, http://plato.stanford.edu/entries/abduction/.